Introduction

In the rapidly evolving world of artificial intelligence, trust is becoming just as important as raw model performance. Constitutional AI offers a concrete path to making advanced systems safer, more predictable, and more aligned with human values. This article breaks down how Anthropic’s approach to constitutional AI works and how platforms like SuperU’s voice AI agents can build on these ideas to earn long-term user trust.

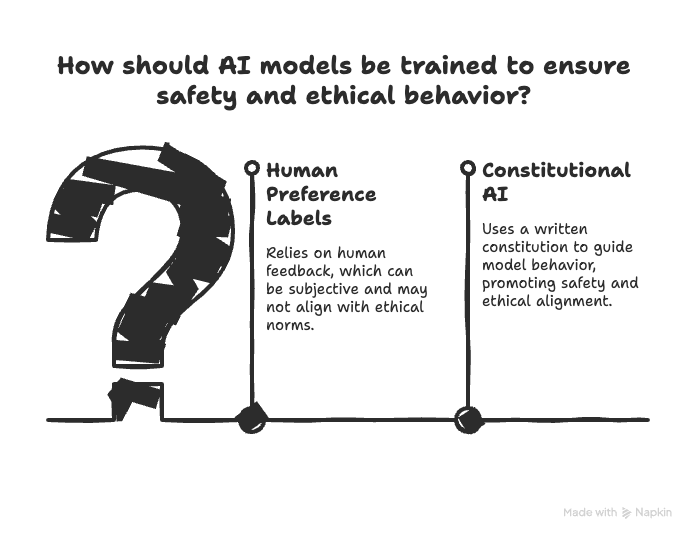

What Is Constitutional AI?

Anthropic introduced constitutional AI as a way to train models using a written “constitution” of high-level principles instead of relying purely on human preference labels. During training, the model critiques and revises its own responses against this constitution, and feedback generated by an AI model from the same principles replaces much of the human labeling of harmful outputs. This helps the model avoid harmful behavior, respect user autonomy, and stay closer to shared ethical norms.

This approach sits at the intersection of AI safety, AI ethics, and AI alignment. Rather than patching problems after deployment, the constitution shapes the model’s incentives from the start, giving teams a more systematic way to reason about acceptable behavior. For readers who want the original technical framing, Anthropic’s “Constitutional AI: Harmlessness from AI Feedback” paper is the canonical reference.
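The critique-and-revise idea can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the principles are hypothetical and not quoted from any published constitution, and `generate` stands in for a call to a language model. In the paper, the revised responses become fine-tuning data; this sketch only shows the loop that produces them.

```python
from typing import Callable, List, Tuple

# Hypothetical principles, written for this example only.
PRINCIPLES = [
    "Avoid content that could help someone cause harm.",
    "Be honest about uncertainty.",
]


def critique_and_revise(
    prompt: str,
    generate: Callable[[str], str],
    principles: List[str],
) -> Tuple[str, List[str]]:
    """Draft a response, then critique and revise it once per principle."""
    response = generate(prompt)
    history = [response]
    for principle in principles:
        # Ask the model to critique its own draft against one principle.
        critique = generate(
            f"Critique this response against the principle '{principle}':\n{response}"
        )
        # Ask the model to rewrite the draft in light of that critique.
        response = generate(
            f"Rewrite the response to address the critique.\n"
            f"Critique: {critique}\nResponse: {response}"
        )
        history.append(response)
    return response, history


def stub_generate(text: str) -> str:
    """Deterministic stand-in for a real model call (assumption)."""
    if text.startswith("Critique"):
        return "too blunt"
    if text.startswith("Rewrite"):
        return "revised draft"
    return "first draft"


final, history = critique_and_revise("How do I reset my PIN?", stub_generate, PRINCIPLES)
```

Swapping `stub_generate` for a real model client is the only change needed to try the loop on actual outputs; the `history` list keeps every intermediate draft so the effect of each principle can be inspected.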

Safety: Building Guardrails Into the Model

Traditional AI safety work focuses on preventing models from producing obviously harmful content or taking unsafe actions. Constitutional AI extends this by encoding safety rules directly into the model’s training loop, so safety is not just a filter on top but a core part of how the system works.

For example, principles around avoiding self-harm content, refusing to assist with malicious requests, or deferring when unsure can all be written into the constitution. That makes it easier for downstream platforms like SuperU’s AI call automation to inherit a strong safety baseline for voice agents that handle sensitive customer conversations.

Ethics: Tackling Bias and Fair Treatment

Public concern around AI ethics often centers on bias, unfair treatment, or models amplifying existing inequalities. Constitutional AI confronts this by including principles that explicitly call for impartiality, respect, and non-discrimination, and then using those principles to steer model behavior.

No approach can completely eliminate bias, but a transparent constitution gives product teams something concrete to audit and improve. External resources that explain constitutional AI can help non-technical stakeholders understand how these ethical constraints are implemented in practice.

Alignment: Keeping Models Close to Human Intent

AI alignment is about making sure models optimize for what people actually want, not just what the training data statistically suggests. Constitutional AI contributes here by turning values such as honesty, helpfulness, and respect for privacy into explicit instructions that shape the model’s responses.

Over time, these constitutions can be updated using public input or domain-specific norms, as explored in research on collective constitutional AI. For a platform like SuperU’s virtual contact center, this means voice agents that stay aligned with brand tone, sector regulations, and customer expectations even as models evolve.

Real World Implications for Regulation and Compliance

As governments introduce stricter regulation around AI, organizations need evidence that their systems follow robust responsible AI development practices. A written constitution, paired with audit logs of how it influences model behavior, gives regulators something more concrete than generic statements about caring about safety.

This is especially relevant for industries that already face heavy compliance requirements, such as healthcare, legal, and financial services. When SuperU’s AI receptionists for law firms or healthcare call center solutions are built on top of constitution-guided models, teams can more easily demonstrate how safety and fairness requirements are enforced across every call flow.
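One way to picture the audit logs mentioned above is as a record that ties each agent action to the principle behind it. The sketch below is a rough illustration under stated assumptions: the field names, the action labels, and the JSON-lines output are all hypothetical and do not describe SuperU’s actual logging format.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class AuditRecord:
    """One auditable decision made during a call (hypothetical schema)."""
    call_id: str
    principle_id: str
    action: str  # e.g. "refused", "escalated", "clarified"
    timestamp: str  # ISO 8601, UTC


def make_record(
    call_id: str,
    principle_id: str,
    action: str,
    now: Optional[datetime] = None,
) -> AuditRecord:
    """Build a record, stamping it with the current UTC time by default."""
    now = now or datetime.now(timezone.utc)
    return AuditRecord(call_id, principle_id, action, now.isoformat())


def to_json_line(record: AuditRecord) -> str:
    """Serialize a record as one line of JSON, suitable for append-only logs."""
    return json.dumps(asdict(record), sort_keys=True)


record = make_record("call-001", "consent", "escalated")
print(to_json_line(record))
```

An append-only file of such lines is simple to hand to an auditor and easy to filter by `principle_id` when reviewing how often a given rule fires.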

How SuperU.ai Can Build on Constitutional AI

SuperU.ai sits at the application layer, where abstract research needs to become concrete workflows. Applying constitutional AI ideas in this context can happen at three levels.

- Operational constitutions for voice agents: Define a domain-specific constitution for call flows, covering consent, escalation rules, and prohibited actions, and ensure every SuperU voice agent scenario is evaluated against it.

- Transparent, explainable call handling: Provide dashboards that show which principle drove an agent’s refusal, escalation, or clarification prompt, echoing the transparency goals highlighted in leading AI safety research.

- Ongoing alignment reviews: Combine call transcripts, customer feedback, and regulatory updates into periodic reviews of the constitution, similar to how Anthropic refines Claude’s constitution over time.

By pairing research-backed guardrails with product-level design, SuperU can position its platform as a practical implementation of responsible AI development for voice and contact center use cases.
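The first level, an operational constitution that every call scenario is checked against, might look something like the following. This is a minimal sketch, not a description of SuperU’s product: the `Principle` type, the three rules, and the scenario keys are all hypothetical names chosen for the example.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# A call scenario is modeled as a plain dict of flags (assumption).
Scenario = Dict[str, object]


@dataclass(frozen=True)
class Principle:
    principle_id: str
    description: str
    is_satisfied: Callable[[Scenario], bool]


# Hypothetical rules covering consent, escalation, and a prohibited action.
CALL_CONSTITUTION = [
    Principle(
        "consent",
        "Obtain the caller's consent before continuing.",
        lambda s: bool(s.get("consent_obtained")),
    ),
    Principle(
        "escalation",
        "Hand off to a human when the caller asks for one.",
        lambda s: not s.get("caller_requested_human") or bool(s.get("escalated")),
    ),
    Principle(
        "prohibited",
        "Never collect full payment card numbers by voice.",
        lambda s: not s.get("collected_card_number"),
    ),
]


def evaluate(scenario: Scenario, constitution: List[Principle] = CALL_CONSTITUTION) -> List[str]:
    """Return the IDs of every principle the scenario violates."""
    return [p.principle_id for p in constitution if not p.is_satisfied(scenario)]


violations = evaluate(
    {"consent_obtained": True, "caller_requested_human": True, "escalated": False}
)
print(violations)  # → ['escalation']
```

Because each violation is reported by principle ID, the same output could feed a dashboard that shows which rule drove a refusal or escalation, and a periodic review could tally which principles fail most often.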

Conclusion: From Research to Reliable AI Experiences

Anthropic’s work on constitutional AI shows that AI systems can be trained to follow explicit, testable principles rather than opaque instincts. For application platforms like SuperU.ai, the opportunity now is to translate those principles into everyday products: AI receptionists that respect boundaries, call bots that can explain their decisions, and contact center agents that stay aligned with brand and regulatory expectations by default.

By grounding its roadmap in clear constitutions, transparent monitoring, and continuous alignment reviews, SuperU.ai can help define what trustworthy, fair, and reliable AI looks like in real customer interactions, well beyond the lab.

